Introduction:

It is easy to create problems for ourselves when working with data. Here are a few best practices for data engineering with Snowflake:

1.Transform your data incrementally:

A common mistake novice data engineers make is writing huge SQL statements that join, aggregate, and process many tables, in the mistaken belief that this is an efficient way to work. In practice, the code becomes overly complex, difficult to maintain, and, worse, often performs poorly. Instead, split the transformation pipeline into multiple steps and write the results of each step to an intermediate table. This makes it easier to test intermediate results, simplifies the code, and often produces simpler SQL that runs faster.
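As a minimal sketch of the idea, the example below splits one "filter, then aggregate" job into two steps, each written to its own table. It uses Python's built-in in-memory SQLite as a stand-in for a warehouse so it can run anywhere; the table names (`raw_orders`, `stg_completed_orders`, `int_customer_totals`) and the data are hypothetical.

```python
import sqlite3

# In-memory SQLite stands in for the warehouse; all names and rows are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE raw_orders (order_id INTEGER, customer_id INTEGER, amount REAL, status TEXT);
    INSERT INTO raw_orders VALUES
        (1, 10, 25.0, 'COMPLETE'),
        (2, 10, 15.0, 'CANCELLED'),
        (3, 20, 40.0, 'COMPLETE'),
        (4, 20, 10.0, 'COMPLETE');

    -- Step 1: keep only the rows of interest and persist them.
    CREATE TABLE stg_completed_orders AS
        SELECT order_id, customer_id, amount
        FROM raw_orders
        WHERE status = 'COMPLETE';

    -- Step 2: aggregate the already-filtered rows into a second table.
    CREATE TABLE int_customer_totals AS
        SELECT customer_id, SUM(amount) AS total_amount, COUNT(*) AS order_count
        FROM stg_completed_orders
        GROUP BY customer_id;
""")

# Each intermediate table can be checked on its own before moving on.
step1_rows = conn.execute("SELECT COUNT(*) FROM stg_completed_orders").fetchone()[0]
totals = conn.execute(
    "SELECT customer_id, total_amount, order_count "
    "FROM int_customer_totals ORDER BY customer_id"
).fetchall()
print(step1_rows)
print(totals)
```

Because every step lands in a table, a wrong total can be traced back to the step that produced it instead of being buried inside one large statement.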

2.Load data using COPY or SNOWPIPE:

Approximately 80% of data loaded into a data warehouse is ingested either via regular batch processes or, increasingly, as soon as the data files arrive. Using COPY and SNOWPIPE is the fastest and cheapest way to load data, so resist the temptation to periodically load data using other methods (such as querying external tables). This is another example of using the right tool for the job.

3.Use multiple data models:

On-premises data storage used to be so costly that it was not feasible to store multiple copies of data, each using a different data model to meet a different requirement. With Snowflake, however, it makes sense to store the raw data history in a structured or VARIANT format, clean and conform the data using third normal form or the Data Vault model, and store the final consumable data in a Kimball dimensional data model. Each data model has its own advantages, and storing the results of intermediate steps has significant architectural advantages. Especially important is the ability to reload and reprocess the data in the event of an error.

4.Choose the right Virtual Warehouse size:

Another tip from the Top 3 Snowflake Performance Tuning Tactics: don’t assume a 6X-LARGE virtual warehouse will load massive data files any faster than an X-SMALL. Each physical file is loaded sequentially, so it pays to follow the Snowflake File Sizing Recommendations and either split multi-gigabyte files into chunks of 100–250 MB or load multiple data files in parallel.
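One way to prepare such chunks is sketched below: a small helper that splits a text file on line boundaries so that no chunk exceeds a byte limit. The function name `split_file_by_lines` and the file names are hypothetical, and the helper assumes one record per line (it does not repeat a CSV header in each chunk or handle quoted fields that span lines). The demo uses a 200-byte limit so it runs instantly; for real loads the limit would be in the 100–250 MB range.

```python
import tempfile
from pathlib import Path


def split_file_by_lines(source: Path, out_dir: Path, max_bytes: int) -> list:
    """Split a text file into chunks of at most max_bytes, never breaking a line.

    A single line longer than max_bytes still gets a chunk of its own.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    chunks = []
    current = None
    written = 0
    with source.open("rb") as src:
        for line in src:
            # Start a new chunk when the next line would push us over the limit.
            if current is None or (written > 0 and written + len(line) > max_bytes):
                if current is not None:
                    current.close()
                chunk_path = out_dir / f"{source.stem}_{len(chunks):05d}{source.suffix}"
                current = chunk_path.open("wb")
                chunks.append(chunk_path)
                written = 0
            current.write(line)
            written += len(line)
    if current is not None:
        current.close()
    return chunks


# Small demo with a hypothetical file and a tiny limit.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "events.csv"
    src.write_bytes(b"".join(f"{i},row\n".encode() for i in range(100)))
    parts = split_file_by_lines(src, Path(tmp) / "chunks", max_bytes=200)
    sizes = [p.stat().st_size for p in parts]
    rejoined = b"".join(p.read_bytes() for p in parts)
    original = src.read_bytes()

print(len(parts), max(sizes))
```

Splitting on line boundaries keeps every chunk independently loadable, which is what lets the files be loaded in parallel.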

5.Keep raw data history:

Unless the data comes from a raw data lake, it makes sense to keep the raw data history. Ideally, store it in a VARIANT data type to benefit from automatic schema evolution. Keeping the history means that downstream data can be truncated and reprocessed if errors are found in the transformation pipeline, and it gives data scientists a great source of raw data. Even if you have no need for machine learning yet, you will almost certainly need it within the next few years.

6.Do not use JDBC or ODBC for normal large data loads:

This is another recommendation about choosing suitable tools. JDBC or ODBC interfaces may be fine for loading a few megabytes of data, but they cannot match the throughput of COPY and SNOWPIPE. Use them where appropriate, but not for regular large data loads.

7.Avoid scanning files:

When ingesting data using the COPY command, use partitioned staging data files as described in Step 1 of the Top 3 Snowflake Performance Tuning Tactics. This reduces the effort of scanning large numbers of data files in cloud storage.
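To make this concrete, here is a small sketch that builds a COPY statement limited to one date partition of a stage. The helper name `build_copy_statement`, the stage and table names, and the `source/YYYY/MM/DD/` folder layout are all assumptions for illustration; the CSV file format options would need to match your actual files. The helper only formats a string and does not validate identifiers.

```python
from datetime import date


def build_copy_statement(table: str, stage: str, source: str, load_date: date) -> str:
    """Build a COPY INTO statement restricted to one date partition of a stage.

    Assumed (hypothetical) layout: @<stage>/<source>/YYYY/MM/DD/<files>
    """
    prefix = f"@{stage}/{source}/{load_date:%Y/%m/%d}/"
    return (
        f"COPY INTO {table}\n"
        f"FROM {prefix}\n"
        "FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1)\n"
        "PATTERN = '.*[.]csv[.]gz'"
    )


# Hypothetical table, stage, and source names.
stmt = build_copy_statement("raw.sales_landing", "raw_stage", "sales", date(2024, 1, 5))
print(stmt)
```

Pointing COPY at the day's prefix means only that folder is listed, rather than every file ever delivered to the stage.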

8.Use the right tool for the requirement:

If you only know one tool, you will end up using it inappropriately. The decision must be based on several factors, such as the skills available on your team, whether you need fast, near-real-time delivery, and whether you are performing a one-time data load or a process that repeats on a regular basis. Note that Snowflake can natively handle a variety of file formats including Avro, Parquet, ORC, JSON, and CSV. Please see the online documentation for detailed instructions on loading data into Snowflake.

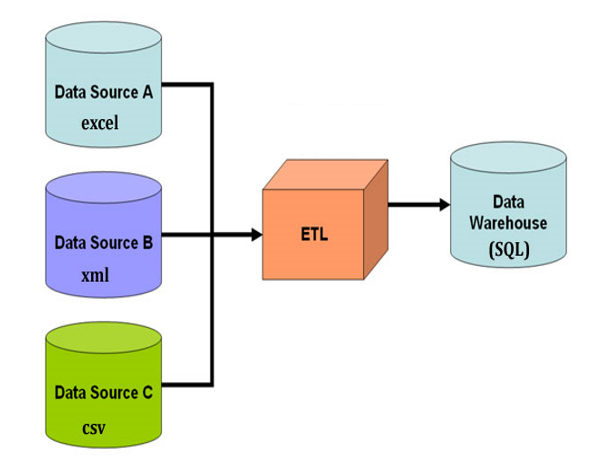

9.Ensure 3rd party tools push down:

ETL tools like Ab Initio, Talend and Informatica were originally designed to extract data from source systems into an ETL server, transform the data, and write it to the warehouse. As Snowflake can draw upon massive on-demand compute resources and automatically scale out, it makes no sense to copy data to an external server. Instead, use the ELT (Extract, Load and Transform) method, and ensure the tools generate and execute SQL statements on Snowflake to maximize throughput and reduce costs.

10.Use Query Tags:

When starting a multi-step transformation task, set the session query tag using ALTER SESSION SET QUERY_TAG = 'XXXXXX', and clear it at the end with ALTER SESSION UNSET QUERY_TAG. This stamps an identifier on each SQL statement until the tag is unset, which is very useful for system administrators. Each SQL statement (and its QUERY_TAG) is logged in the QUERY_HISTORY view, allowing you to track job performance over time. This lets you quickly see when a change to a task has degraded performance, identify inefficient transformation jobs, or spot jobs that would run better on a larger or smaller warehouse.
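A minimal sketch of this pattern: the helper below wraps a list of SQL statements with the set and unset commands so every step of a job carries the same tag. The helper name `with_query_tag`, the tag value, and the example statements are hypothetical, and the history query assumes you have access to the `SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY` view.

```python
def with_query_tag(tag: str, statements: list) -> list:
    """Wrap SQL statements so they all run under one session QUERY_TAG."""
    escaped = tag.replace("'", "''")  # escape single quotes inside the tag literal
    return [
        f"ALTER SESSION SET QUERY_TAG = '{escaped}'",
        *statements,
        "ALTER SESSION UNSET QUERY_TAG",
    ]


# Hypothetical job: the table and procedure names are placeholders.
job = with_query_tag(
    "nightly_sales_load",
    [
        "TRUNCATE TABLE stg_sales",
        "INSERT INTO stg_sales SELECT * FROM raw_sales",
    ],
)

# Later, job runtimes can be compared by tag (assumes access to ACCOUNT_USAGE).
history_sql = (
    "SELECT query_tag, start_time, total_elapsed_time\n"
    "FROM snowflake.account_usage.query_history\n"
    "WHERE query_tag = 'nightly_sales_load'\n"
    "ORDER BY start_time"
)

for statement in job:
    print(statement)
```

Building the list in one place makes it harder to forget the UNSET at the end, which would otherwise leave later statements in the session carrying the wrong tag.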

11.Use Transient Tables for Intermediate Results:

During complex ELT pipelines, write intermediate results to transient tables that can be truncated before the next load. This cuts Time Travel storage down to at most one day and avoids an additional 7 days of Fail-safe storage. Use temporary tables where that makes sense, but remember that they disappear when the session ends, and it is often useful to validate the results of intermediate steps in a complex ELT pipeline afterwards.
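As a rough sketch, an intermediate step can be materialized with a `CREATE OR REPLACE TRANSIENT TABLE ... AS SELECT` statement. The helper name `create_transient_table_as` and the table names below are hypothetical; the helper only builds the SQL text.

```python
def create_transient_table_as(table: str, select_sql: str) -> str:
    """Return a CREATE OR REPLACE TRANSIENT TABLE ... AS SELECT statement."""
    body = select_sql.strip().rstrip(";")  # tolerate a trailing semicolon
    return f"CREATE OR REPLACE TRANSIENT TABLE {table} AS\n{body}"


# Hypothetical intermediate step in a pipeline.
ddl = create_transient_table_as(
    "int_customer_totals",
    "SELECT customer_id, SUM(amount) AS total_amount FROM stg_orders GROUP BY customer_id;",
)
print(ddl)
```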

12.Avoid row-by-row processing:

Modern analytics platforms such as Snowflake are designed to ingest, process, and analyze billions of rows at incredible speed using simple SQL statements that act on the data a set at a time. However, people tend to think in terms of row-by-row processing, and this can lead to programming loops that fetch and update one row at a time. Row-by-row processing is the surest way to degrade query performance. Use SQL statements that process all the rows in a table at once, and avoid row-by-row processing at all costs.
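The contrast can be sketched as follows. The example uses Python's built-in in-memory SQLite as a stand-in for the warehouse (so the timings are not representative of Snowflake), and the `prices` table and the 1.25 multiplier are made up. Both approaches produce the same result, but the loop issues one statement per row while the set-based version issues a single statement.

```python
import sqlite3


def make_table(conn: sqlite3.Connection) -> None:
    """Create a hypothetical prices table with 1,000 rows."""
    conn.execute("CREATE TABLE prices (id INTEGER PRIMARY KEY, net REAL, gross REAL)")
    conn.executemany(
        "INSERT INTO prices (id, net) VALUES (?, ?)",
        [(i, float(i)) for i in range(1, 1001)],
    )


# Anti-pattern: fetch every row, then issue one UPDATE per row.
row_conn = sqlite3.connect(":memory:")
make_table(row_conn)
row_statements = 0
for row_id, net in row_conn.execute("SELECT id, net FROM prices").fetchall():
    row_conn.execute("UPDATE prices SET gross = ? WHERE id = ?", (net * 1.25, row_id))
    row_statements += 1

# Set-based: a single statement updates every row.
set_conn = sqlite3.connect(":memory:")
make_table(set_conn)
set_conn.execute("UPDATE prices SET gross = net * 1.25")
set_statements = 1

row_result = row_conn.execute("SELECT id, gross FROM prices ORDER BY id").fetchall()
set_result = set_conn.execute("SELECT id, gross FROM prices ORDER BY id").fetchall()
print(row_statements, set_statements, row_result == set_result)
```

Against a remote warehouse, each of those per-row statements would also pay a network round trip and its own query compilation, which is where most of the slowdown comes from.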

13.Follow standard ingestion patterns:

This involves a multi-step process of landing data files in cloud storage and loading them into landing tables before transforming the data. Breaking the entire process down into defined steps makes it easier to orchestrate and test.

Conclusion:

In this blog, we have discussed a few ways to work with data using Snowflake. The best practices above cover data ingestion, transformation, batch ingestion and processing, continuous data streaming, and data lake exploration.
